Montana State University was a member of the 2014 cohort of the eResearch Network.

At our university– as at many institutions– we spend a great deal of time counting research in dollars. What we do not spend much time doing is tracking and celebrating the research itself. We count grants applied for, grants funded, and grants renewed; we track million-dollar grants down to the penny and celebrate their existence. So, as an outsider looking in, you would assume that grant money was our reason for existence. And any faculty member worth their startup funds will tell you that research output is important, but especially when in the tenure or hiring process. Last year, we found out that no one on our campus knew about our research in aggregate: the information was piecemeal at best, hidden on department web pages and out of date CVs.

Why should we collect this information? Beyond celebrating research output as a campus, this information is useful for metrics, accreditation numbers, and outreach. We aimed to collect this information to highlight faculty research output and gain understanding of the work produced on our campus.[pullquote2 quotes=”false” align=”right”]At our university– as at many institutions– we spend a great deal of time counting research in dollars. What we do not spend much time doing is tracking and celebrating the research itself. [/pullquote2]We also found local uses for the citation information; for example, when our Institutional Repository (IR) was created, the library included a metric in its strategic plan to “Optimize the ScholarWorks Institutional Repository (IR) to hold 20% of scholarly output by 2018.” With no specific guide on how to measure where 100% was, we planned to look to the self- reporting through annual reviews: that was delayed at best (2014 data in July 2015) and hugely incomplete at worst (entire colleges refused to participate, even though reporting is tied to merit raises).

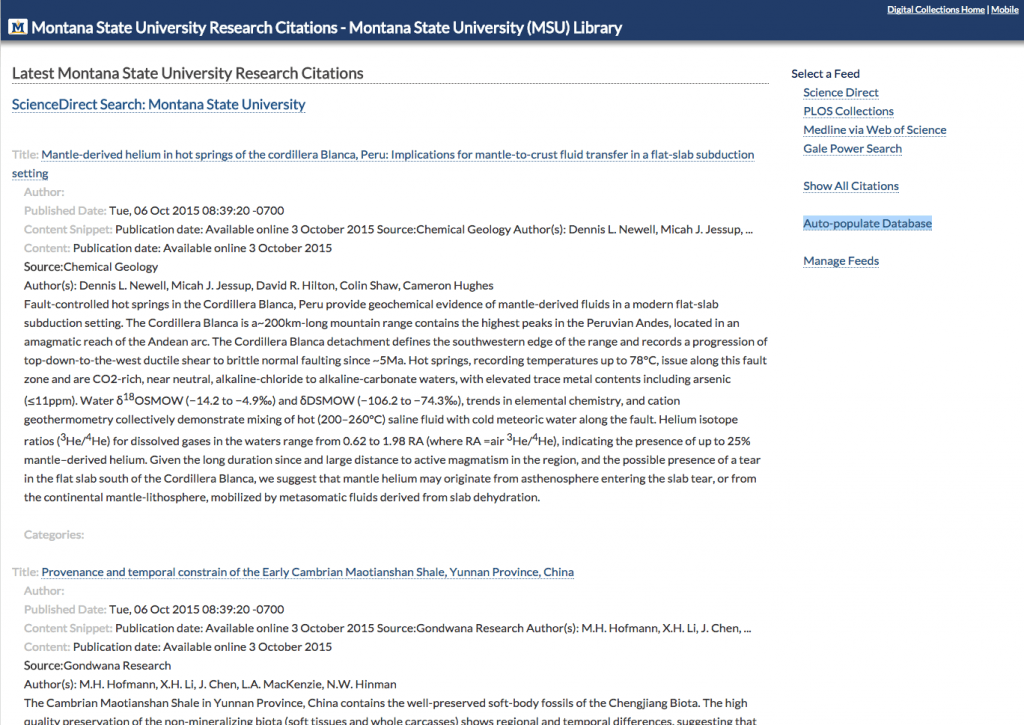

In order to know the scholarly output of the university, we needed a better strategy. We found no open source tool to collect the citation information from faculty publications (Symplectic Elements does a good job, at a price), so the Montana State University (MSU) Library built a research citation app to capture citation information from various academic databases using RSS feeds and alerts (https://arc.lib.montana.edu/msu-research-citations/). The research citation application is built on the principle of treating each citation as an item that can be searched, browsed, exported, etc. The complete data model and the primary files are available on the msulibrary github account. One of the novel approaches to the app was building an ingestion interface that used these external data sources to collect related MSU research citations. Typically, these were RSS feeds with “msu” and “montana state” pattern matches. These feeds were not always perfect so we worked with James Espeland (one of our lead developers) to build a review interface.

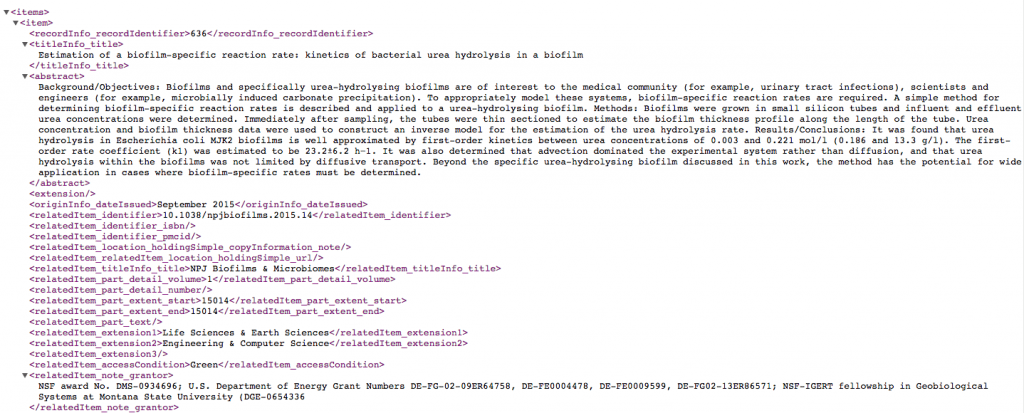

The library was not the only unit on campus interested in this information. During each stage of software development, new partners with a desire for the citation data emerged. Our Research Office, the Office of Planning and Analysis, and department heads were all interested in publicizing research output: the citation information we gather will be used to celebrate research, track the outcomes of grants, and integrate with assessment tools. It was clear that the data could add value, but we had to figure out how to make the data harvestable and reusable. We turned toward a structured data and API solution to help our potential partners.

(Example API response: http://arc.lib.montana.edu/msu-research-citations/api?v=1&date=2015-09&format=xml)

In the library, we also use this up-to-date publication information to contact individual authors about IR submissions. This has increased our deposit rate in our IR and the number of researchers represented in the tool.

Scholarly Communication librarians are often found approaching researchers one by one advocating for open access, collecting CVs, talking to [pullquote2 quotes=”false” align=”right”]Borrowing the marketing idea of a “pain point,” we chose instead to hit researchers at a “celebration point.”[/pullquote2]

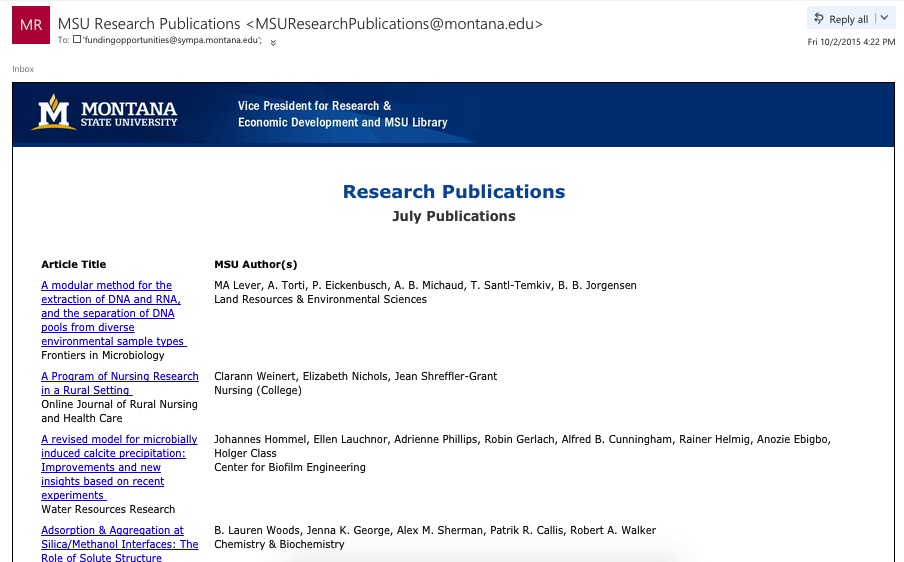

departments at meetings, and imploring researchers to share their three-year-old postprints. This is time-consuming and may create one-time interactions that are difficult to translate into enduring relationships. Borrowing the marketing idea of a “pain point,” we chose instead to hit researchers at a “celebration point.” If we can contact (usually by email) an author and ask for the appropriate version of an article at or near the time of publication, we have found we are much more likely to be able to add that item to our IR.

While this is not the first or only attempt to collect and utilize citation information at the university level[ref]The JISC Publications Router is a promising data feed initiative looking to automate the delivery of research publications info – http://broker.edina.ac.uk/. We have looked into using the Router as one of our data feeds, but there is a short lag between publication and ingestion into the Router that limits the utility of the Router as a real-time data source for citations. Regardless, this is valuable work from JISC and the University of Edinburgh and the quality of the feeds is very high (including full citations and consistent metadata).[/ref], we aim to continue to refine and disseminate our app to simplify the often lengthy, error-prone, or expensive task with which scholarly communication teams or research offices are increasingly tasked. We believe that our app will to bring together multiple information sources in a user- and machine-friendly interface to facilitate the API- driven harvesting, ingest, and reuse of citation data. We hope that this will continue to increase repository deposit rates from faculty and that we will be able to use the resulting citation data to enable new library services that reach broadly across the institution—that citation collection will drive research infrastructure progress.

Our project implementation enables the integration of IR activities into broader discussions about research on campus. We are able to use data that was exclusively used by the IR in new ways that enrich and enable other aspects of our research enterprise. As one example, we are able to produce an email output that the Vice President of Research, in partnership with the library, can distribute to all of campus.

We are positioning citation collection as the data engine that drives scholarly communication, deposits into our IR, and assessment of research activities. More importantly, we feel we are in the first stages of this project. The data we have collected has obvious value for promotion and generating research deposit leads for scholarly communication, but there are other uses we are considering. One tangible, workable result we have gained through our citation collection and reuse model is an understanding of what constitutes a good data feed (consistent, structured data) and knowledge of best sources for these data feeds (aggregators like PLOS, Medline, and the JISC Router). We are anticipating network analysis research as we connect a linked data graph to this citation information and are looking forward to textual analysis by applying data mining techniques to the abstracts we have collected, which will allow us to get a better understanding of the research topics here on campus. In many ways, the citation data is just the beginning and we are looking forward to future partnerships and projects.